CloudPets - another internet toy hack

I recently read about the sad saga of CloudPets. It's a nice idea: in a perfect world, maybe it would be a positive example of the novel ways that the internet lets us keep in touch with those we care about. But no. Instead, it's another disastrous example of "what not to do for security".

A lot of things went wrong here. Some of them were obvious (like, "don't make your database publicly accessible to the internet"), some of them weren't. Even the obvious ones have some good points to discuss, though.

Let's start with the glaringly obvious. The database was publicly accessible over the internet without any authentication. Embarrassingly negligent? Absolutely. But it's also an embarrassment that this is the default configuration of MongoDB:

“If installed on a server with the default settings, for example, MongoDB allows anyone to browse the databases, download them, or even write over them and delete them.”

Wow. And this, friends, is why MS SQL Server is a nuisance to set up the first time: the alternative is far, far worse. Microsoft learned this lesson the hard way too, for example via the SQL Slammer worm.

1. Do you need to listen over the network?

SQL Server doesn't listen over the network by default. Yes, this makes it a slight nuisance to set up if you do want network access, but the opt-in approach is much more secure. Opt-in generally needs to be balanced against usability, but in this case it's the right call. Consider the two options:

- SQL Server is listening on the network:
    - You want to use it over the network. Great! Life is easy.
    - You don't want to use it over the network. Not great! You have a larger attack surface for no reason.

- SQL Server is not listening on the network:
    - You don't want to use it over the network. Great! You don't pay a security cost for something you don't need.
    - You want to use it over the network. Minor headache, possibly some troubleshooting.

Let's consider the worst-case scenario for each option. In the first case, well, we've seen the worst-case scenario. Bear in mind that some software is more dangerous to expose than other software: a database often holds the crown jewels, so not exposing it over the network is a big win. Moreover, there are plenty of cases where the database doesn't need network access at all, so this is a legitimate default (if, say, 95% of all databases needed to be network-accessible, it wouldn't be as significant a win). Indeed, the CloudPets database was apparently hosted on the same box as the web server (we'll get to that). Developers and testers don't need their local databases to be visible outside their machines either.

What's the worst-case scenario for the other option? Troubleshooting and configuration headaches when you first set it up.
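The same opt-in default is easy to apply in software you write yourself. Here is a minimal Python sketch; the function name `open_listener` and its `allow_network` flag are hypothetical, chosen for illustration. It binds to the loopback address unless the caller explicitly opts in to listening on every interface:

```python
import socket


def open_listener(port: int, allow_network: bool = False) -> socket.socket:
    """Open a TCP listening socket that is local-only unless explicitly opted in."""
    # 127.0.0.1 is reachable only from this machine; 0.0.0.0 means every IPv4 interface.
    host = "0.0.0.0" if allow_network else "127.0.0.1"
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((host, port))
    sock.listen()
    return sock


if __name__ == "__main__":
    # Port 0 asks the OS for any free port, which is handy for a quick demo.
    with open_listener(0) as listener:
        print("listening on", listener.getsockname())
```

For a real database this is a configuration setting rather than code (MongoDB's, for example, is `net.bindIp`), but the trade-off is the same: network exposure should be a deliberate choice.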

2. Defense in depth: firewalls

So, fine: the database is listening for connections from the network. Why is the internet able to reach it? This is another astonishing embarrassment. There should have been a perimeter firewall (the conceptual equivalent of your home router) that only lets in traffic on ports that specifically need it (e.g. HTTP/S).

That's not all, though. Raise your hand if you keep Windows Firewall enabled. You should. Again, it's a minor nuisance to open ports, but it could save you if someone plugs an infected laptop into the network, or runs a virus-laden attachment that spreads over the LAN. A host firewall on the server could also have protected the database here.

And let's say you do need to open up the database to outside machines. You can limit that to only machines on the LAN:

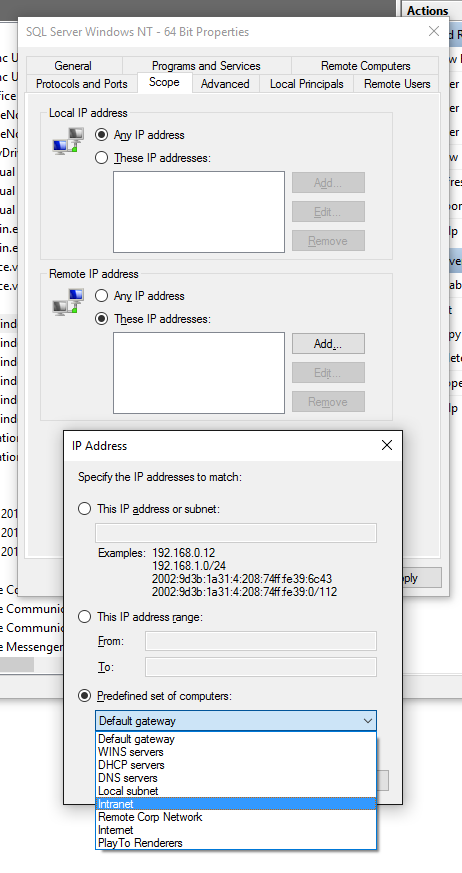

Windows Firewall lets you set the scope of a rule so that, for example, it only allows intranet traffic.
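To make the two layers concrete (an allowlist of ports at the perimeter, plus a restricted scope for the database port), here is a toy default-deny rule check in Python. It models the idea only; it is not how Windows Firewall or any real firewall is configured. The rule set is a hypothetical example, with 27017 being MongoDB's default port:

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    port: int
    allowed_sources: tuple  # networks allowed to reach this port


# Hypothetical rule set: HTTP/S open to anyone, the database port only to private LAN ranges.
ANYWHERE = ipaddress.ip_network("0.0.0.0/0")
RULES = (
    Rule(443, (ANYWHERE,)),
    Rule(80, (ANYWHERE,)),
    Rule(27017, (ipaddress.ip_network("192.168.0.0/16"), ipaddress.ip_network("10.0.0.0/8"))),
)


def is_allowed(source_ip: str, port: int, rules=RULES) -> bool:
    """Default deny: traffic passes only if some rule matches both the port and the source."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule.port == port and any(addr in net for net in rule.allowed_sources)
        for rule in rules
    )
```

Anything not explicitly matched is dropped, so a forgotten rule fails closed (a connection is refused) rather than open.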

3. Defense in depth: account segregation

This setup had the database and the web server on the same machine. That's not intrinsically bad, but you need to be careful. Specifically, you want to avoid single points of failure or compromise. Let's say there's a vulnerability in the web server software that grants access to the machine (via the account that's running the web server). You don't want that to compromise the database as well.

This is tricky, of course: if the web server can use the database, compromising the web server will inevitably give an attacker some access to the database. But it shouldn't let them, for example, dump the entire database or delete it. So:

- Don't use the same account for the web server and the database. They should run under different user accounts, so that compromising one account causes only limited damage.

- Minimize the privileges of the account running each piece of software.
    - Don't run it as Admin/SYSTEM. Set up the ACLs you need.

- Limit the web server account's access to the database. Don't be lazy and just make the web server account a db_owner! It should probably only have read/write access to the particular database it needs.
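A quick audit of who can do what can catch a lazy db_owner grant before an attacker does. The Python sketch below works on a hypothetical in-memory grant table (roles and permissions lumped together for simplicity, account and database names invented). In practice you would populate it from the database's own catalog, which in SQL Server means views such as `sys.database_role_members` and `sys.database_permissions`:

```python
# Hypothetical grant table: account -> {database: set of roles/permissions}.
GRANTS = {
    "webapp": {"cloudpets": {"SELECT", "INSERT", "UPDATE"}},
    "legacy_app": {"cloudpets": {"db_owner"}, "master": {"SELECT"}},
}

# Plain read/write access; anything beyond this gets flagged for review.
READ_WRITE = {"SELECT", "INSERT", "UPDATE", "DELETE"}


def audit(account: str, expected_db: str, grants=GRANTS) -> list:
    """Return findings for an account that should only touch expected_db."""
    findings = []
    for db, perms in sorted(grants.get(account, {}).items()):
        if db != expected_db:
            findings.append(f"{account} can access unrelated database {db!r}")
        excess = perms - READ_WRITE
        if excess:
            findings.append(f"{account} has excess rights on {db!r}: {sorted(excess)}")
    return findings


for name in ("webapp", "legacy_app"):
    print(name, audit(name, "cloudpets") or "OK")
```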

Note that with IIS, each application pool has its own identity (a virtual account) by default. You can assign ACLs to "IIS AppPool\AppPoolName". It won't show up in the UI; you have to type it in. But it works with file permissions and with SQL Server Windows Authentication. Very powerful and very handy (no need to create and track your own service users and passwords). See Microsoft's documentation on IIS application pool identities for more information.

As an aside, don't install unnecessary software on servers: PDF readers, Firefox, and so on. It's a server; you shouldn't be using it for those tasks.

Anyway, if you can, putting the database and the web server on separate machines is even better. Assume the web server machine gets completely owned.

4. Defense in depth: machine segregation

In the previous point, we talked about compromise of the user account running the web server. Let's take that up a notch and assume the worst: the server as a whole is compromised. If the database is running there too, you're in more trouble than if it's on a separate machine. I won't go into much depth here, but I want to make a related point: if you have encrypted data and the decryption key is on the same machine, that machine is a single point of failure.

Up until now, we've been focused on securing the infrastructure. But assume the data will be compromised. How do we mitigate that?

5. Proper password storage

They did this right: they stored bcrypt hashes of users' passwords (bcrypt salts each hash automatically; if you use a different scheme, don't forget to salt). That was good. As the original write-up mentions, though, they didn't enforce any password strength requirements. That's not so good.
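For reference, here is a sketch of salted, deliberately slow password hashing plus a minimal strength check. bcrypt isn't in Python's standard library, so to keep the example self-contained it swaps in PBKDF2 (`hashlib.pbkdf2_hmac`); with the third-party `bcrypt` package the equivalent calls are `bcrypt.hashpw(password, bcrypt.gensalt())` and `bcrypt.checkpw(password, hashed)`. The length threshold, iteration count and storage format are assumptions for illustration, not recommendations from the original write-up:

```python
import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # assumption: tune so one hash takes a noticeable fraction of a second
MIN_LENGTH = 10       # hypothetical policy; pick requirements that suit your users


def hash_password(password: str, iterations: int = ITERATIONS) -> str:
    if len(password) < MIN_LENGTH:
        raise ValueError(f"password must be at least {MIN_LENGTH} characters")
    salt = secrets.token_bytes(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    # Hypothetical storage format: algorithm$iterations$salt$digest.
    return f"pbkdf2_sha256${iterations}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    algorithm, iterations, salt_hex, digest_hex = stored.split("$")
    if algorithm != "pbkdf2_sha256":
        return False
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode(), bytes.fromhex(salt_hex), int(iterations)
    )
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(digest, bytes.fromhex(digest_hex))
```

Storing the algorithm and iteration count alongside the hash means you can raise the work factor later and rehash passwords as users log in.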

Also, while we're here: never check secrets (like service passwords or decryption keys) into source control. For ASP.NET, Microsoft documents several alternative ways to store secrets.
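Whatever the platform, the pattern is the same: the code names the secret, and the deployment environment supplies it. A minimal Python sketch, assuming secrets arrive as environment variables (one common option among several, such as a dedicated secrets manager):

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been provided by the environment."""


def get_secret(name: str) -> str:
    value = os.environ.get(name)
    if not value:
        # Fail loudly at startup instead of falling back to a default committed to the repo.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value
```

At startup the application would call something like `get_secret("DB_PASSWORD")`, where `DB_PASSWORD` is a hypothetical variable name; the value itself never appears in the repository.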

6. Proper file access

They did this wrong: all files were (are?) publicly accessible. Knowing a file's URL, which was stored in the database, was enough to download it (so the database was a single point of failure here). One way they could have handled this is to lock down the S3 bucket and have the web server relay the files, so that you'd need to be authenticated to the web server to get any data. (The web server then holds the S3 credentials: don't store them in source control!) The downside is that every file has to pass through the server, which costs resources and bandwidth.
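The relay approach boils down to one rule: the server checks who is asking before it touches private storage. A minimal Python sketch, where the owner map and in-memory store are hypothetical stand-ins for a database table and a locked-down S3 bucket:

```python
from typing import Optional


class Forbidden(Exception):
    pass


# Hypothetical stand-ins: who owns each file, and private storage only the server can read.
FILE_OWNERS = {"rec-001": "alice", "rec-002": "bob"}
PRIVATE_STORE = {"rec-001": b"alice's recording", "rec-002": b"bob's recording"}


def relay_file(authenticated_user: Optional[str], file_id: str) -> bytes:
    if authenticated_user is None:
        raise Forbidden("login required")
    # Knowing a file ID (or URL) is not enough: the caller must own the file.
    if FILE_OWNERS.get(file_id) != authenticated_user:
        raise Forbidden("not your file")
    return PRIVATE_STORE[file_id]
```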

S3 also lets you create temporary URLs (pre-signed URLs) that grant access to a file until a predefined expiry time. This would have been a viable option: the files remain locked down in S3 (not publicly accessible), and when a file is requested, the web server uses its AWS credentials to generate a temporary URL. Of course, anyone who knows this URL can access the file, but the risk is reasonable because the URL is temporary, isn't stored, and isn't easily guessed. There's an example on Stack Overflow.
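With boto3, the real call is `s3_client.generate_presigned_url("get_object", Params={"Bucket": bucket, "Key": key}, ExpiresIn=300)`, which signs the URL with AWS Signature Version 4. To show the underlying idea without AWS, here is a standard-library sketch of the same technique: an expiry timestamp plus an HMAC over the path and expiry, verified by the server. The key, host and path are hypothetical, and this is not S3's URL format:

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical key; in practice load it from a secret store, never from source control.
SIGNING_KEY = b"hypothetical-server-side-key"


def _sign(path: str, expires: int) -> str:
    message = f"{path}\n{expires}".encode()
    return hmac.new(SIGNING_KEY, message, hashlib.sha256).hexdigest()


def make_temporary_url(base: str, path: str, ttl_seconds: int = 300,
                       now: Optional[float] = None) -> str:
    """Return base+path with an expiry time and a signature covering both."""
    current = time.time() if now is None else now
    expires = int(current + ttl_seconds)
    query = urlencode({"expires": expires, "sig": _sign(path, expires)})
    return f"{base}{path}?{query}"


def check_temporary_url(url: str, now: Optional[float] = None) -> bool:
    """Accept the URL only if it has not expired and the signature matches."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False
    current = time.time() if now is None else now
    if current > expires:
        return False  # the link has expired
    return hmac.compare_digest(sig.encode(), _sign(parsed.path, expires).encode())
```

Because the signature covers both the path and the expiry, changing either one invalidates the link.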

7. Bonus: use extra care for personal information

Most developers know to hash passwords, but storing personally identifiable information in plaintext is also a bad idea. Leaking people's names, addresses, birthdays, security questions, etc. can leave them open to identity theft. Consider encrypting them, or storing them separately. Even just keeping them in a separate database alongside the main one, where the web server has no SELECT access to the tables and can only call a "get a specific record by ID" function, limits the possibility of a bulk exposure.
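In SQL Server, the "get a specific record by ID" approach would typically be a stored procedure that the web server's account may EXECUTE, with no SELECT permission on the underlying table. SQLite has no user permissions, so the Python sketch below only shows the shape of that narrow interface (the table, columns and class name are hypothetical); the actual enforcement has to come from the database's own permissions:

```python
import sqlite3
from typing import Optional


class CustomerDirectory:
    """Exposes one record at a time; there is deliberately no 'list everything' method."""

    def __init__(self, conn: sqlite3.Connection):
        self._conn = conn

    def get_by_id(self, customer_id: int) -> Optional[dict]:
        row = self._conn.execute(
            "SELECT id, name, birthday FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return None if row is None else {"id": row[0], "name": row[1], "birthday": row[2]}


def demo_connection() -> sqlite3.Connection:
    # In-memory database with made-up rows, for demonstration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, birthday TEXT)")
    conn.executemany(
        "INSERT INTO customers (id, name, birthday) VALUES (?, ?, ?)",
        [(1, "Alice Example", "1980-01-01"), (2, "Bob Example", "1975-06-30")],
    )
    return conn
```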

8. Humans and procedures

People tried to warn CloudPets about the database issue, but they couldn't get through or were ignored. If a good Samaritan found a hole in your setup, would they be able to report it to you?
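One lightweight way to make sure they can is a `security.txt` file, standardized in RFC 9116 and served at `/.well-known/security.txt`, which tells researchers where to send reports. The RFC requires a `Contact` field and an `Expires` field. A minimal Python sketch that renders one, with a placeholder contact address and policy URL:

```python
from datetime import datetime, timezone
from typing import Optional


def render_security_txt(contact: str, expires: datetime,
                        policy_url: Optional[str] = None) -> str:
    """Render a minimal RFC 9116 security.txt body."""
    # Expires must be an RFC 3339 timestamp; normalize to UTC with a trailing "Z".
    stamp = expires.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    lines = [f"Contact: {contact}", f"Expires: {stamp}"]
    if policy_url:
        lines.append(f"Policy: {policy_url}")
    return "\n".join(lines) + "\n"


print(render_security_txt("mailto:security@example.com",
                          datetime(2030, 1, 1, tzinfo=timezone.utc),
                          "https://example.com/security-policy"))
```

The RFC recommends an `Expires` date less than a year in the future, so the file needs periodic refreshing.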

Other internal procedures matter too, of course, but let's not get overwhelmed right now: often it takes a very small amount of time and effort to drastically improve the resilience of a system, and perhaps more importantly, to minimize the possible scope of a breach. This took me around two hours to write up.

Do you have a public-facing web service? Has it been looked at from a security perspective? Maybe it's worth spending some time to review its design. (We can help!)